Graphical User Interface

The System

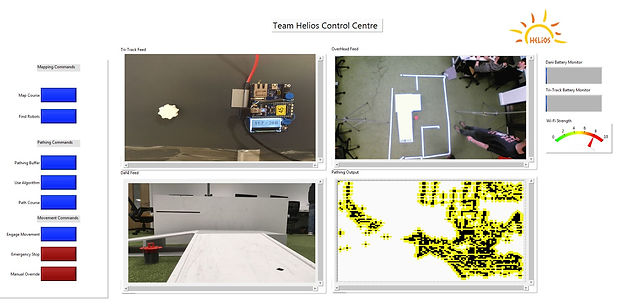

The graphical user interface (GUI) presents information from multiple sub-systems on a single, easy-to-read dashboard, allowing the user to operate the entire system from one place. It also provides the user with updates as the mission progresses. The GUI includes a series of buttons that allow the user to direct or override the system at any point: buttons to start the various system processes and to locate the systems, an emergency stop, a direct override, and options to modify the system settings.

The GUI displays four live views:

- A live feed from the camera attached to the overhead Iris system.

- A live feed from the webcam attached to the Kratus system.

- A live feed from the webcam attached to the Janus system.

- A real-time update of the route-finding process.

It also updates the user on the system's progress as the mission proceeds. For example, a text notification appears when the system starts to deploy the bridge, and if wildlife sounds are detected, the user interface displays an image taken from the direction of the sound.

Finally, the battery level of each system is displayed, as well as the WiFi strength. The current system cannot yet determine these levels accurately; the indicators are included in the GUI because the features are under development and would be present in the post-prototype stage.
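As an illustration only, one way a dashboard could show indicators that do not yet have trustworthy readings is to treat each level as optional and display a placeholder until a value is available. The sketch below is not the team's implementation; the names `SystemStatus`, `format_level` and `status_line`, and the "n/a" placeholder, are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SystemStatus:
    """Hypothetical status record for one system shown on the dashboard."""
    name: str
    battery_percent: Optional[float] = None  # None means no reliable reading yet
    wifi_percent: Optional[float] = None     # None means no reliable reading yet


def format_level(value: Optional[float]) -> str:
    """Return a display string, using a placeholder when no reading exists."""
    if value is None:
        return "n/a"
    clamped = max(0.0, min(100.0, value))  # keep the display within 0-100 %
    return f"{clamped:.0f}%"


def status_line(status: SystemStatus) -> str:
    """Build the one-line text a status panel might show for a system."""
    return (
        f"{status.name}: battery {format_level(status.battery_percent)}, "
        f"WiFi {format_level(status.wifi_percent)}"
    )


if __name__ == "__main__":
    print(status_line(SystemStatus("Kratus")))
    print(status_line(SystemStatus("Janus", battery_percent=87.4, wifi_percent=60.0)))
```

Keeping the missing-reading case explicit means the indicators can stay in the layout now and start showing real values once measurement is available, without changing the display code.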

The System’s Integration Platform

For the complete system to operate autonomously, team Helios developed a platform that integrates the base station programs with the system components on site. The platform is easy to operate: a short running order of commands prepares the system for use and activates the drones. The other major component this UI integrates is the pathing software described in the navigation section.

Capabilities Demonstrated

- Human Factors - Human factors were of paramount importance while developing the GUI, with significant thought given to the users operating the system. The blue and red colours used allow colour-blind users to operate the system, and clear, large writing was used for easy reading. Furthermore, the one-screen design makes the system as convenient as possible for the user.

- Providing the control centre with mission and technical status updates - The GUI displays three camera feeds, from the overhead system and the robots, to provide the user with as many views as possible of the scenario. There are pop-up notifications for when the ultrasonic sensors detect that the robots are too close to walls or falls.

- Careful Handling - The large number of tools available to the user means that they can use the systems as they see fit. With the addition of pop-up notifications, manual override, an emergency stop button, and battery life and WiFi indicators, the user will always feel in control of the robots.
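The pop-up notifications driven by the ultrasonic sensors can be pictured as a simple threshold check on the latest distance readings. The following is a minimal sketch under stated assumptions, not the system's actual code: the function name `proximity_alerts`, the 15 cm threshold, and the message wording are all hypothetical, and only the wall case is covered (detecting falls would need a separate check).

```python
from typing import Dict, List

# Hypothetical warning distance; the report does not state the real value.
WARNING_DISTANCE_CM = 15.0


def proximity_alerts(
    readings_cm: Dict[str, float],
    threshold_cm: float = WARNING_DISTANCE_CM,
) -> List[str]:
    """Return one notification message per robot that is closer than the threshold.

    readings_cm maps a robot name to its latest ultrasonic distance in centimetres.
    """
    alerts = []
    for robot, distance in sorted(readings_cm.items()):
        if distance < threshold_cm:
            alerts.append(f"{robot}: obstacle at {distance:.0f} cm")
    return alerts


if __name__ == "__main__":
    # Example readings; in a GUI each message would be shown as a pop-up.
    for message in proximity_alerts({"Kratus": 9.0, "Janus": 40.0}):
        print(message)
```

Separating the check from the display keeps the notification logic testable without a running interface.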